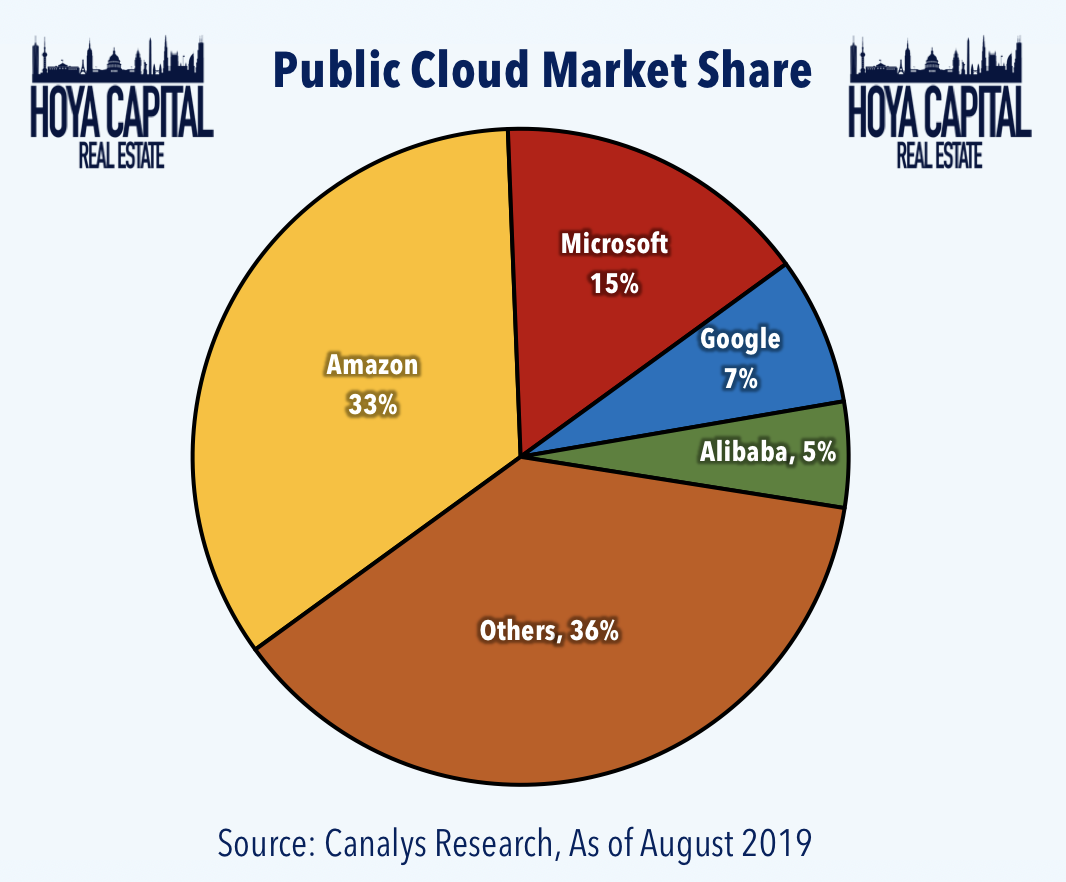

Data center providers with double the share of their closest competitor lead their markets in profitability. The reason lies in the relationship between market share and profit margin or return on investment.

That is arguably true in connectivity markets and in public cloud computing as well. Alphabet's cloud profit margins are said to be quite low, in the single-digit range, while AWS margins are in the 61 percent range. But Microsoft has never published profit margins for Azure or its other public cloud services, so it is impossible to verify that the expected pattern holds across all three leaders.
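The "double the share of the closest competitor" test from the opening is a simple ratio: a firm's share divided by the share of its largest rival. Here is a minimal sketch of that check. The function names are hypothetical, and the shares in the example are illustrative only, not market data.

```python
def relative_share(own_share: float, competitor_shares: list[float]) -> float:
    """Ratio of a firm's share to that of its largest rival (hypothetical helper)."""
    largest_rival = max(competitor_shares)
    return own_share / largest_rival


def leads_by_double(own_share: float, competitor_shares: list[float]) -> bool:
    """True when the firm has at least twice the share of its closest competitor."""
    return relative_share(own_share, competitor_shares) >= 2.0


# Illustrative shares in percent, not actual market figures.
print(relative_share(40.0, [20.0, 15.0, 10.0]))   # 2.0
print(leads_by_double(40.0, [20.0, 15.0, 10.0]))  # True
print(leads_by_double(30.0, [25.0, 20.0]))        # False
```

A firm at 30 percent facing a 25 percent rival leads its market, but with a ratio of only 1.2 it does not clear the doubling threshold.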

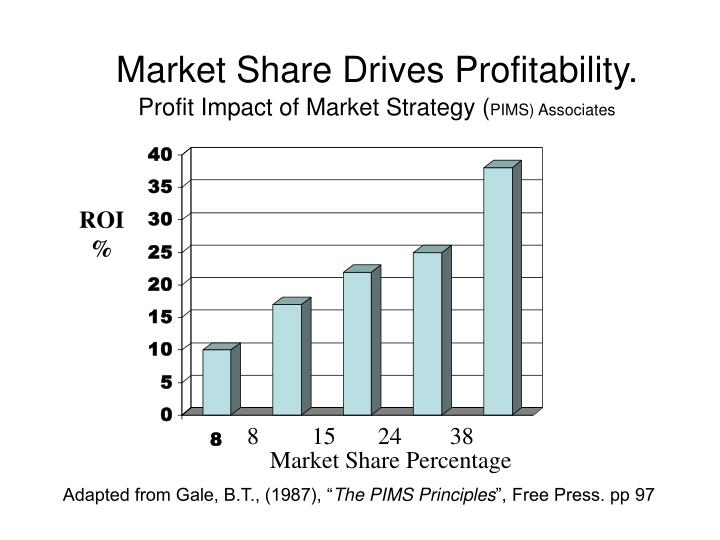

Profit margin is almost always related to market share or installed base, at least in part because larger firms can obtain scale advantages. Most of us would intuitively expect higher share to correlate with higher profits.

That is true in the connectivity and data center markets as well.

source: Harvard Business Review

Researchers also argue that market share confers market power, which makes leaders less susceptible to price predation by competitors. Some also argue that the firms with the largest shares outperform because they have better management talent. PIMS researchers might argue that better management leads to outperformance. Others might argue that outperformance attracts better managers, or at least managers perceived to be “better.”

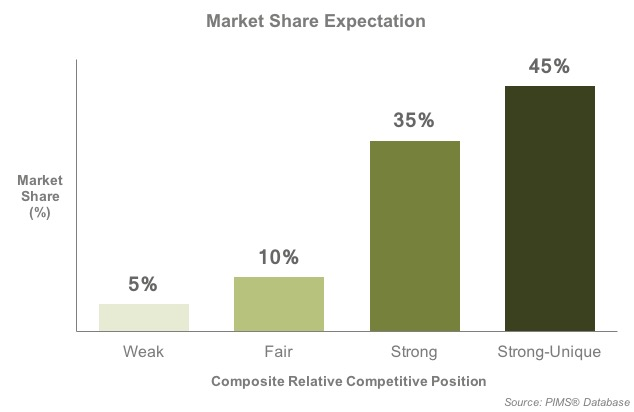

Without a doubt, firms with larger market shares are able to vertically integrate to a greater degree. Apple, Google, Meta and AWS can design their own chipsets, build their own servers and run their own logistics networks.

source: Slideserve

The largest firms also have bargaining power over their suppliers. They may also be more efficient in their marketing processes and spending: firms with large share can use mass media more effectively than firms with small share.

Firms with larger share can afford to build specialized sales forces for particular product lines or customers, which smaller firms are less able to do. Firms with larger share also arguably benefit from brand awareness and preference, which lessen the need to advertise or market as heavily as smaller, lesser-known brands must.

Firms with higher share are arguably also able to develop products with multiple market positions, including premium products with higher prices and profit margins.

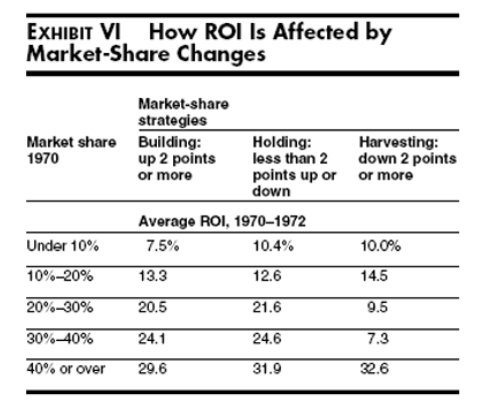

source: Contextnet

That noted, the association between higher share and higher profit is stronger in industries selling products that are purchased infrequently. The relationship is weaker for firms and industries selling frequently purchased, lower-value, lower-priced products, where switching to an alternative brand poses little risk to the buyer.

The relationships tend to hold whether firms are spending to gain share, are mostly focused on keeping share, or are harvesting products late in their product life cycles.

source: Harvard Business Review

The adage that “nobody gets fired for buying IBM” (or Cisco, or any other “safe” product in any industry) illustrates that phenomenon for high-value, expensive and more mission-critical products.

For grocery shoppers, house brands illustrate what probably drives the weaker relationship between share and profit for regularly purchased items. Many such products are commodities, or nearly so, where brand value helps but does not ensure high profit margins.

On the other hand, in industries with few buyers, such as national defense products, profit margins can be more compressed than in industries with highly fragmented buyer bases.

Studies such as the Profit Impact of Market Strategies (PIMS) have examined this relationship for decades. PIMS is a comprehensive, long-term study of the performance of strategic business units in thousands of companies in all major industries.

The PIMS project began at General Electric in the mid-1960s. It was continued at Harvard University in the early 1970s, then was taken over by the Strategic Planning Institute (SPI) in 1975.

Markets tend to consolidate over time, and they do so because market share is related fairly directly to profitability.

One rule of thumb some of us use is that the profits earned by a contestant with 40 percent market share are at least double those of a provider with 20 percent share.

And profits earned by a contestant with 20 percent share are at least double the profits of a contestant with 10 percent market share.
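Chaining the two steps of that rule of thumb shows what it implies: a 40 percent contestant should earn at least four times the profit of a 10 percent contestant. The sketch below simply applies "double the share, at least double the profit" repeatedly. The function name and the baseline profit of 100 (in any currency unit) are hypothetical, chosen only to make the arithmetic visible.

```python
def profit_floor_by_doubling(
    base_profit: float, base_share: float, doublings: int
) -> list[tuple[float, float]]:
    """Apply the doubling rule of thumb repeatedly.

    Returns (share, minimum profit) pairs, starting at the base share.
    Each doubling of share at least doubles profit, so the floor doubles too.
    """
    rows = []
    share, profit = base_share, base_profit
    for _ in range(doublings + 1):
        rows.append((share, profit))
        share *= 2
        profit *= 2
    return rows


# Hypothetical baseline: a 10 percent contestant earning 100.
for share, floor in profit_floor_by_doubling(100.0, 10.0, 2):
    print(f"{share:.0f}% share -> profit of at least {floor:.0f}")
# 10% share -> profit of at least 100
# 20% share -> profit of at least 200
# 40% share -> profit of at least 400
```

The rule only speaks to doublings, so the sketch deliberately does not interpolate profit at shares in between.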

This chart shows that for connectivity service providers, market share and profit margin are related. Ignoring market entry issues, the firms with higher share have higher profit margin. Firms with the lowest share have the lowest margins.

source: Techeconomy

In facilities-based access markets, there is a reason for the rule of thumb that a contestant must achieve market share of at least 20 percent to survive: access is a capital-intensive business with high break-even requirements.
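One way to see why break-even share matters is to express fixed cost per location passed and contribution margin per paying customer on the same annual basis. Total fixed cost is the per-passing cost times the number of locations passed; total contribution is share times locations passed times margin per customer. Setting the two equal, the number of locations cancels, leaving break-even share equal to fixed cost per passing divided by margin per customer. This is a minimal sketch under that simplified model; the function name and the figures (120 of annualized fixed cost per passing, 600 of annual margin per customer) are hypothetical and not drawn from any operator.

```python
def break_even_share(fixed_cost_per_passing: float, margin_per_customer: float) -> float:
    """Minimum share of locations passed that must buy service to cover fixed costs.

    fixed cost  = fixed_cost_per_passing * passings
    contribution = share * passings * margin_per_customer
    Setting them equal, passings cancels: share = fixed cost / margin.
    """
    if margin_per_customer <= 0:
        raise ValueError("margin per customer must be positive")
    return fixed_cost_per_passing / margin_per_customer


# Hypothetical annual figures per location, for illustration only.
print(f"{break_even_share(120.0, 600.0):.0%}")  # 20%
```

Under these assumed numbers, a network below 20 percent penetration cannot cover its fixed costs no matter how many locations it passes, because building more plant adds cost in proportion to passings.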

At 20 percent share, a network is earning revenue from only one in five locations passed. Other competitors are getting the rest. At 40 percent share, a supplier has paying customers at four out of 10 locations passed by the network.

That allows the high fixed costs to be borne by twice as many customers, which in turn means significantly lower infrastructure cost per customer.
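The per-customer effect is straightforward division: the same fixed cost spread over the customers actually served. The sketch below assumes a hypothetical network passing 100,000 locations with 50 million (in any currency unit) of annualized fixed cost; the function name and numbers are illustrative only.

```python
def fixed_cost_per_customer(total_fixed_cost: float, locations_passed: int, share: float) -> float:
    """Spread a network's fixed cost over the paying customers it serves."""
    if share <= 0:
        raise ValueError("share must be positive")
    customers = locations_passed * share
    return total_fixed_cost / customers


# Hypothetical network: 100,000 locations passed, 50 million in annualized fixed cost.
for share in (0.20, 0.40):
    cost = fixed_cost_per_customer(50_000_000, 100_000, share)
    print(f"{share:.0%} share: {cost:,.0f} per customer")
# 20% share: 2,500 per customer
# 40% share: 1,250 per customer
```

Doubling share from 20 percent to 40 percent halves the fixed cost each customer must cover, whatever the absolute numbers are.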