The name change from Facebook to Meta illustrates why remote computing and computing as a service are driving computing to the edge.

“The metaverse is a shared virtual 3D world, or worlds, that are interactive, immersive, and collaborative,” says Nvidia.

Facebook says “the metaverse will feel like a hybrid of today’s online social experiences, sometimes expanded into three dimensions or projected into the physical world.”

Just as 3D in the linear television world was highly bandwidth intensive, metaverse applications are expected to need lots of bandwidth. Just as fast-twitch videogaming relies on low-latency response, metaverse applications will require very low latency. And just as web pages are essentially custom built for each viewer based on past behavior, metaverse experiences will be custom built for each user, in real time, often drawing on content and computing resources in different physical locations.

All of that places new emphasis on low-latency response and on high-bandwidth computing and communications network support. Metaverse experiences also will be highly compute intensive, often requiring artificial intelligence.

As with earlier 3D television, high-quality videoconferencing apps and immersive games, metaverse experiences require choices about where to place compute functions: remote or local. Those decisions in turn determine the required communications capabilities.

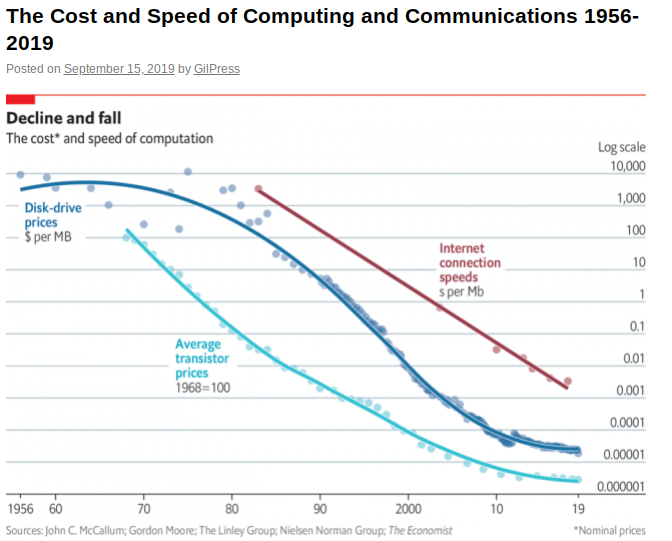

Those choices always involve cost and quality decisions, even as computational and bandwidth costs have fallen roughly in line with Moore’s Law for the last 70 years.

source: Economist, What's the Big Data
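To give a sense of the scale of that decline, here is a minimal Python sketch of exponential cost reduction. It assumes, hypothetically, that the cost of a fixed amount of computing halves every two years (one common statement of Moore's Law); the function name `relative_cost` and the two-year period are illustrative assumptions, not figures from the source.

```python
# Hypothetical model: the cost of a fixed amount of computing halves
# every `halving_years` years. The two-year default is an assumption.


def relative_cost(years: float, halving_years: float = 2.0) -> float:
    """Cost after `years`, as a fraction of the starting cost."""
    return 0.5 ** (years / halving_years)


if __name__ == "__main__":
    for span in (10, 30, 70):
        factor = 1 / relative_cost(span)
        print(f"after {span} years: roughly {factor:,.0f}x cheaper")
```

Under that assumption, 70 years of halving every two years works out to 2^35, a cost reduction by a factor of roughly 34 billion.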

Just as low computational costs made packet switching and the internet possible, low computational costs support both remote and local computing. Among the issues app designers increasingly face are latency performance and communications cost. Local resources inherently have the latency advantage, and they can also win on cost once the price of wide area bandwidth is added to the remote option. Energy footprint also differs between local and remote computing.
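The latency side of that trade-off has a physical floor set by distance. The sketch below is a minimal illustration, not a network model: it assumes signals in optical fiber travel at roughly 200,000 km/s (about 200 km per millisecond) and ignores routing, queuing and processing delay. The names `min_round_trip_ms` and `fits_budget`, and the 20 ms budget and 8 ms processing time in the example, are hypothetical.

```python
# Assumption: light in optical fiber covers roughly 200,000 km/s,
# i.e. about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay over fiber.

    Excludes routing, queuing and processing delay, so real latency is higher.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


def fits_budget(distance_km: float, budget_ms: float, processing_ms: float = 0.0) -> bool:
    """True if propagation delay plus (hypothetical) processing time fits the budget."""
    return min_round_trip_ms(distance_km) + processing_ms <= budget_ms


if __name__ == "__main__":
    budget = 20.0  # hypothetical end-to-end latency budget, in ms
    for km in (10, 500, 1500):
        rtt = min_round_trip_ms(km)
        ok = fits_budget(km, budget, processing_ms=8.0)
        print(f"{km:>5} km: >= {rtt:.1f} ms round trip, fits budget: {ok}")
```

Under these assumptions a compute site 1,000 km away costs at least 10 ms of round trip before any processing happens, while one 10 km away costs about 0.1 ms, which is why tight latency budgets favor local or edge placement.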

On the other hand, remote computing means less investment in local servers. The point is that “remote computing plus wide area network communications” is a functional substitute for local computing, and vice versa. When performance is equivalent, designers have choices about when to use remote computing and local, with communications cost being an integral part of the remote cost case.
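The substitution argument can be written as a simple cost comparison. In this hypothetical sketch (the `Option` class, the `cheaper` function and all cost figures are illustrative assumptions), the remote option's total includes its wide area network cost, and the comparison is only meaningful when both options already deliver equivalent performance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    compute_cost: float        # hypothetical cost of servers or cloud capacity
    network_cost: float = 0.0  # hypothetical wide area bandwidth cost; 0 for purely local

    @property
    def total_cost(self) -> float:
        return self.compute_cost + self.network_cost


def cheaper(local: Option, remote: Option) -> Option:
    """Pick the lower-cost option, assuming both deliver equivalent performance.

    The remote total already includes wide area network cost, because
    "remote computing plus WAN communications" is what substitutes for local.
    Ties go to the local option.
    """
    return local if local.total_cost <= remote.total_cost else remote


if __name__ == "__main__":
    local = Option("local servers", compute_cost=1200.0)
    remote = Option("remote cloud", compute_cost=700.0, network_cost=300.0)
    print(cheaper(local, remote).name)
```

With these made-up numbers the remote option totals 1,000 against 1,200 for local, so remote wins; raise the network cost to 600 and the remote total becomes 1,300, so local computing becomes the cheaper substitute.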

Metaverse use cases, however, are driven to the edge (local) for performance reasons. For highly compute-intensive use cases with low-latency requirements, the placement decision is first about performance and only secondarily about cost.

In other words, the need for fast computation and the sheer volume of computation often dictate the choice of local computing. That means metaverse apps drive computing to the edge.

source: Couchbase
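The performance-first, cost-second rule described above can be sketched as a two-step filter: drop any placement that misses the latency budget, then pick the cheapest of what remains. The `Placement` type, the `choose_placement` function, and the site names, latencies and costs below are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Placement:
    name: str
    round_trip_ms: float  # hypothetical expected round trip to this compute site
    total_cost: float     # hypothetical compute cost plus any wide area bandwidth cost


def choose_placement(options: Sequence[Placement], latency_budget_ms: float) -> Optional[Placement]:
    """Performance first, cost second.

    Step 1: discard any site whose round trip exceeds the latency budget.
    Step 2: among the remaining sites, return the cheapest.
    Returns None when no site meets the budget.
    """
    feasible = [o for o in options if o.round_trip_ms <= latency_budget_ms]
    if not feasible:
        return None
    return min(feasible, key=lambda o: o.total_cost)


if __name__ == "__main__":
    sites = [
        Placement("regional cloud", round_trip_ms=40.0, total_cost=800.0),
        Placement("edge site", round_trip_ms=5.0, total_cost=1100.0),
        Placement("on-device", round_trip_ms=0.0, total_cost=1500.0),
    ]
    for budget in (20.0, 50.0):
        choice = choose_placement(sites, budget)
        print(budget, choice.name if choice else "no feasible site")
```

With a 20 ms budget only the edge site and on-device options qualify, so the edge site wins even though the regional cloud is cheapest overall; relax the budget to 50 ms and the regional cloud becomes the choice.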